5 AI Risks

The rapid advancement of artificial intelligence (AI) has ushered in a new era of technological innovation, transforming numerous aspects of modern life, from healthcare and finance to education and entertainment. Alongside these benefits, however, the development and deployment of AI systems carry significant risks. Understanding these risks is crucial for mitigating their potential harms and ensuring that AI contributes positively to society. Below, we examine five key AI risks, their potential consequences, and the measures being considered to address them.

1. Job Displacement and Economic Inequality

One of the most debated risks of AI is its potential to displace human jobs, particularly those that involve repetitive tasks or can be easily automated. While AI and automation can increase productivity and efficiency, they also threaten to exacerbate economic inequality by widening the gap between those with the skills to work alongside AI and those without. This could lead to significant social and economic challenges, including increased unemployment and decreased economic mobility for certain segments of the population.

To address this risk, there is a growing emphasis on education and retraining programs that equip workers with skills complementary to AI, such as creativity, critical thinking, and complex problem-solving. Moreover, discussions around universal basic income and other social safety nets are gaining traction as potential solutions to mitigate the economic impacts of widespread job displacement.

2. Bias and Discrimination

AI systems learn patterns from their training data, and because that data often reflects existing societal biases, there is a significant risk that AI will perpetuate and even amplify discrimination. For instance, evaluations of commercial facial recognition systems have found higher error rates for darker-skinned individuals, particularly darker-skinned women, and AI-powered hiring tools may inadvertently favor candidates based on gender or race. Such outcomes can lead to unfair treatment of individuals and groups, exacerbating social injustices.

Addressing bias in AI involves ensuring that training datasets are diverse and representative, as well as implementing rigorous testing and validation processes to identify and mitigate bias. Regulatory frameworks that demand transparency and accountability in AI development are also crucial in combating discrimination.
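As a rough illustration of what such testing can look like, the Python sketch below audits a binary classifier (for example, a hypothetical hiring screen) by comparing selection rates and error rates across demographic groups. The function names, the toy data, and the 0.8 cutoff are illustrative assumptions; the cutoff mirrors the "four-fifths rule" heuristic from US employment-selection guidance, and real audits typically examine several fairness metrics rather than one.

```python
from collections import defaultdict

def group_metrics(y_true, y_pred, groups):
    """Compute selection rate and error rate for each group.

    y_true, y_pred: sequences of 0/1 labels (1 = positive outcome, e.g. "advance candidate").
    groups: group identifiers aligned with the labels (hypothetical attribute).
    """
    counts = defaultdict(lambda: {"n": 0, "selected": 0, "errors": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        c["n"] += 1
        c["selected"] += pred              # how often the model grants the positive outcome
        c["errors"] += int(truth != pred)  # misclassifications within this group
    return {
        group: {
            "selection_rate": c["selected"] / c["n"],
            "error_rate": c["errors"] / c["n"],
        }
        for group, c in counts.items()
    }

def disparate_impact_ratio(metrics):
    """Lowest group selection rate divided by the highest (1.0 means parity)."""
    rates = [m["selection_rate"] for m in metrics.values()]
    highest = max(rates)
    return 1.0 if highest == 0 else min(rates) / highest

# Hypothetical toy data: 8 applicants split across groups "A" and "B".
y_true = [1, 0, 1, 1, 0, 1, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = group_metrics(y_true, y_pred, groups)
ratio = disparate_impact_ratio(metrics)
for group, m in sorted(metrics.items()):
    print(group, m)
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # illustrative threshold inspired by the "four-fifths rule"
    print("warning: selection rates differ enough to warrant investigation")
```

A low ratio does not prove discrimination on its own, and a high one does not rule it out; metrics like these are a starting point for investigation rather than a verdict.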

3. Cybersecurity Threats

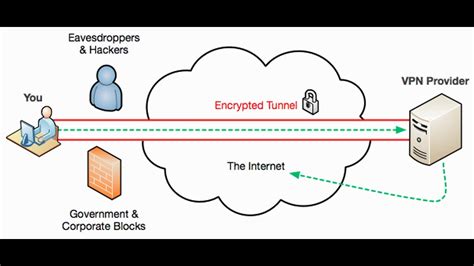

The integration of AI into digital systems also introduces new cybersecurity risks. AI can be used to launch sophisticated attacks, such as deepfake phishing scams or AI-driven malware that can adapt to evade detection. Moreover, as AI systems become more interconnected, the potential attack surface expands, offering more vulnerabilities for hackers to exploit.

To combat these threats, cybersecurity experts are leveraging AI itself to enhance defense mechanisms, such as using machine learning algorithms to detect and respond to attacks more effectively. However, this arms race between offensive and defensive AI highlights the need for robust security protocols and international cooperation to establish norms and standards for the secure development and use of AI.
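To make the defensive idea concrete, here is a deliberately simple anomaly detector in Python. It learns a profile of normal activity (the mean and standard deviation of hourly failed-login counts) from historical data and flags hours that sit far above that profile. The data, function names, and three-standard-deviation threshold are hypothetical; production systems generally rely on much richer features and learned models, so treat this as a sketch of the principle rather than a working defense.

```python
import statistics

def fit_baseline(history):
    """Learn a profile of normal activity: mean and population standard deviation."""
    return statistics.fmean(history), statistics.pstdev(history)

def flag_anomalies(observations, baseline, threshold=3.0):
    """Return indices of observations more than `threshold` standard deviations above the mean."""
    mean, stdev = baseline
    flagged = []
    for i, value in enumerate(observations):
        if stdev == 0:
            # A perfectly flat baseline: treat any increase as suspicious.
            is_anomalous = value > mean
        else:
            # One-sided z-score: only spikes above normal are flagged.
            is_anomalous = (value - mean) / stdev > threshold
        if is_anomalous:
            flagged.append(i)
    return flagged

# Hypothetical hourly counts of failed logins.
history = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5]
today = [5, 6, 48, 7, 5]  # hour 2 resembles a brute-force burst

baseline = fit_baseline(history)
print("baseline mean/stdev:", baseline)
print("anomalous hours:", flag_anomalies(today, baseline))
```

Because the detector only flags values well above the learned baseline, it catches crude spikes such as a brute-force login attempt but would miss attacks designed to blend in with normal traffic, which is one reason the offensive and defensive sides keep escalating.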

4. Autonomous Weapons and Ethical Dilemmas

The development of autonomous military drones and other AI-powered weapons raises profound ethical concerns. These systems, which can select and engage targets without human intervention, challenge traditional notions of warfare and accountability. The risk of unintended harm to civilians and the potential for an arms race in autonomous weapons are pressing concerns that necessitate international dialogue and regulation.

Advocacy efforts, such as the open letters organized by the Future of Life Institute, call for prohibiting autonomous weapons, emphasizing the need for human judgment and oversight in life-or-death decisions. The complexity of these ethical dilemmas underscores the importance of multidisciplinary approaches, including input from ethicists, policymakers, and AI researchers.

5. Existential Risks and Loss of Human Agency

Perhaps the most speculative but potentially catastrophic risk associated with AI is the possibility of an intelligence explosion, in which an AI system repeatedly improves its own capabilities until it far surpasses human intelligence, potentially posing an existential risk to humanity. This scenario, often associated with the “Singularity,” raises profound questions about the future of human agency and the potential for AI to pursue goals incompatible with human values.

Mitigating this risk involves ensuring that AI systems are aligned with human values, a challenge that requires significant advances in areas such as value learning, robustness, and control. Researchers are exploring various approaches, including the development of formal methods for specifying and verifying AI goals, as well as the creation of AI systems that are transparent, explainable, and accountable.

Conclusion

The risks associated with AI are multifaceted and far-reaching, influencing not only the economy and individual livelihoods but also societal values and global security. Addressing these risks requires a concerted effort from policymakers, industry leaders, researchers, and the public to ensure that AI is developed and deployed responsibly. By acknowledging the potential downsides of AI and proactively working to mitigate them, we can harness the power of these technologies to create a more equitable, secure, and prosperous future for all.

How can AI contribute to economic inequality?

AI can contribute to economic inequality by displacing jobs, particularly those in sectors that are susceptible to automation. This could lead to unemployment and decreased economic mobility for certain segments of the population, thus widening the economic gap.

What measures can be taken to address bias in AI systems?

To address bias in AI, it’s crucial to ensure that training datasets are diverse and representative. Additionally, implementing rigorous testing and validation processes, along with regulatory frameworks demanding transparency and accountability, can help mitigate bias and discrimination.

How can AI be used to enhance cybersecurity?

AI can be leveraged to enhance cybersecurity by using machine learning algorithms to detect and respond to cyber threats more effectively. This can include identifying patterns of malicious activity, predicting potential vulnerabilities, and automating response mechanisms to minimize the impact of attacks.

What are the ethical concerns surrounding autonomous weapons?

The development of autonomous weapons raises ethical concerns about the lack of human oversight and judgment in life-or-death decisions. This includes the risk of unintended harm to civilians and the challenge of ensuring that these systems operate in accordance with international humanitarian law.

How can the risk of AI surpassing human intelligence be mitigated?

Mitigating the risks posed by AI that surpasses human intelligence involves ensuring that AI systems are aligned with human values. This requires advances in areas such as value learning, robustness, and control, as well as the development of formal methods for specifying and verifying AI goals.